Products and Draft Patents

AuditoryAI

The invention is a new process to register the existence of sound objects, localize them, prioritize them, and direct the behaviour of an agent (e.g. a robot) towards them.

gazeTracking

The product enables an intelligent system equipped with a single camera to infer gaze direction from head orientation and iris position.


Testimonials

-------------------------------------------------

Prof. Samia Nefti Meziani/Robotics and Automation, Salford University

--------------------------------------------------

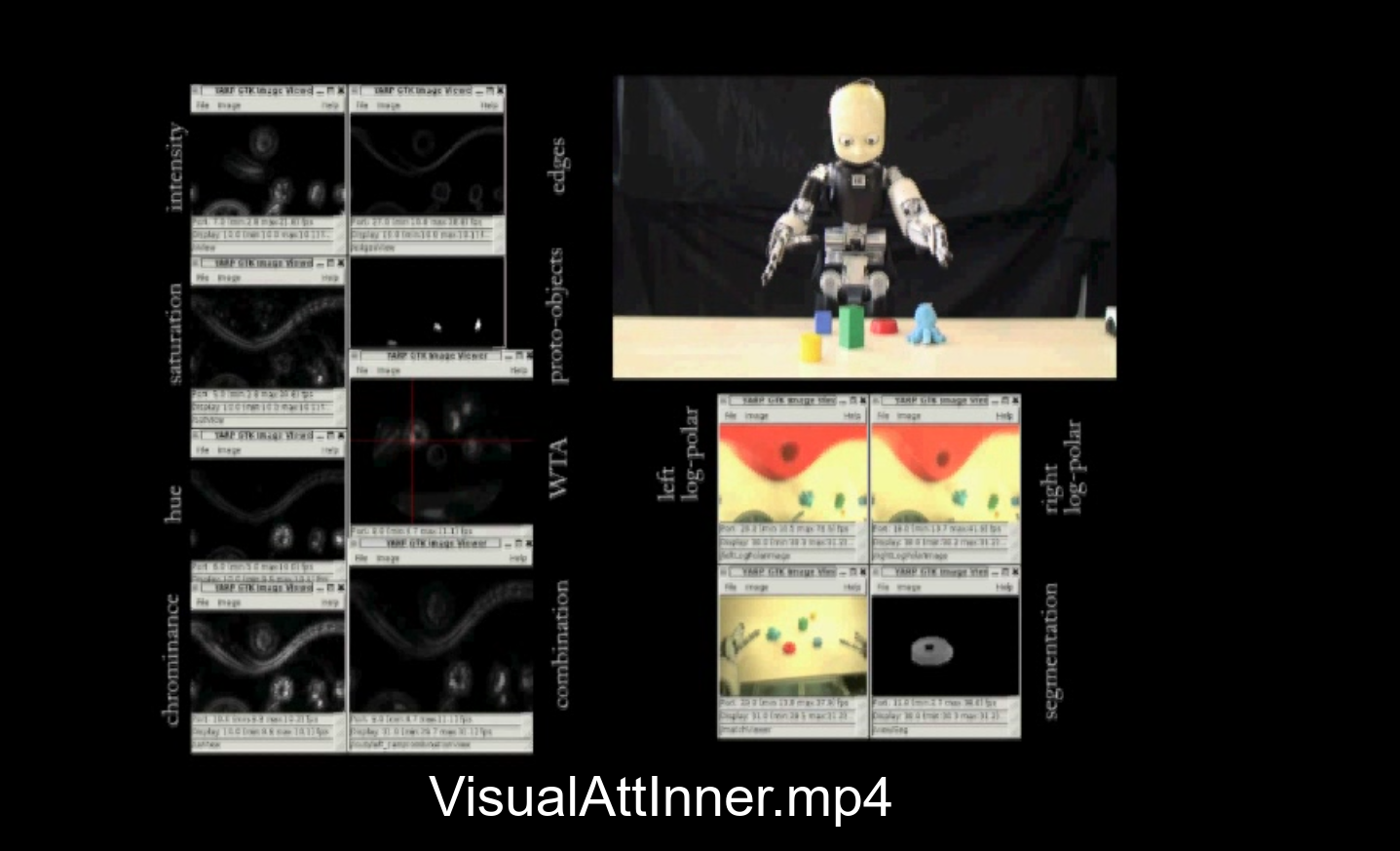

Prof. Davide Brugali/Robotics, Università degli Studi di Bergamo

log-polar visual attention system and oculomotor control

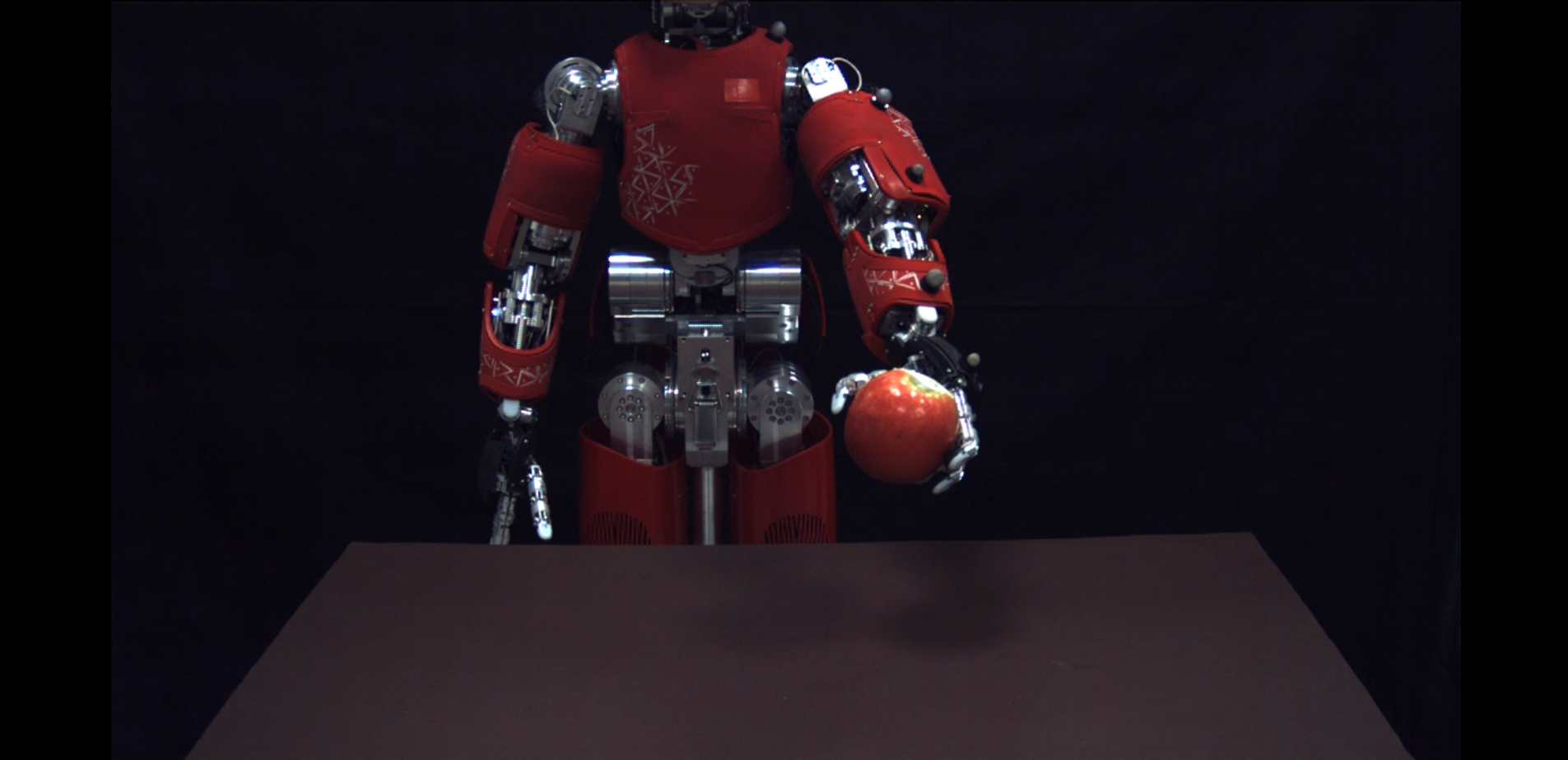

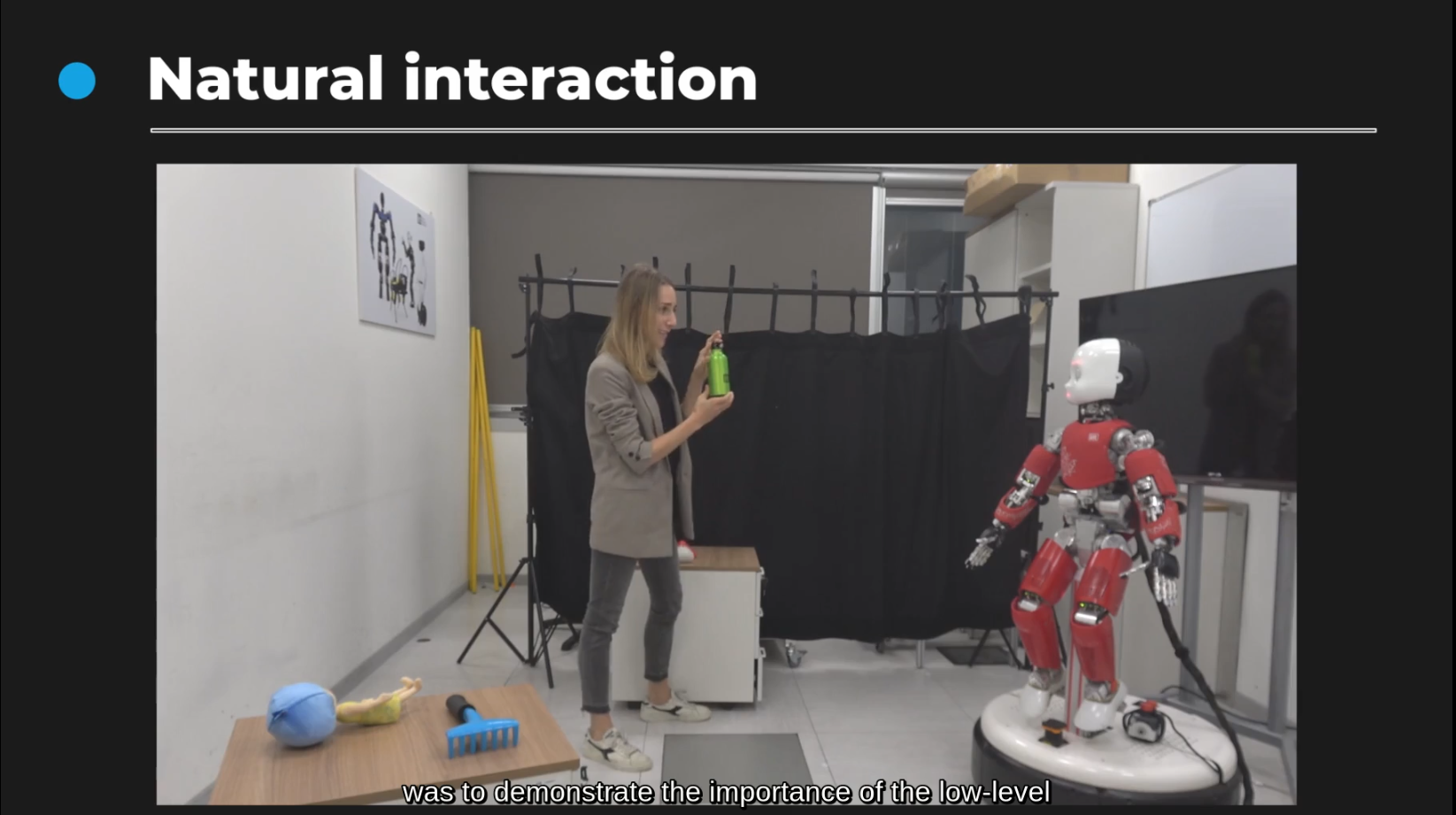

biological motion in control of the humanoid robot end-effector


AudioVisual cognitive architecture for autonomous learning
